Architecting a data lake with Amazon S3, Amazon Kinesis, AWS Glue and Amazon Athena

Reasons for building a data lake

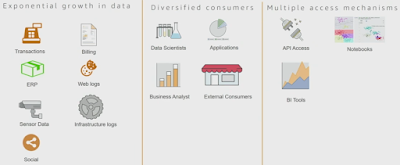

Characteristics of a data lake

1. Collect Anything: Ability to collect anything

2. Dive in Anywhere: Ability to dive in anywhere, that is, at any granularity

3. Flexible Access: Flexible access mechanisms such as BI tools, Spark, ML algorithms and R (raw access / API access)

4. Future Proof: Ability to take advantage of future improvements

Amazon S3 as the data lake

# Identified as the best place to store data, as the 'starting point' of the data lake

Data can be in any form in S3, so we need an ETL process:

1. Clean

2. Transform

3. Concatenate

4. Convert to better formats

5. Schedule transformations

6. Event-driven transformations

7. Transformations expressed as code
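As a rough illustration of "transformations expressed as code", the clean, transform and convert steps could look like the sketch below. It is not taken from the talk: the CSV layout and the `device_id` / `temperature` field names are assumptions made for the example, and a real pipeline would read from and write to S3 rather than in-memory strings.

```python
import csv
import io
import json


def clean_and_convert(raw_csv: str) -> str:
    """Clean raw CSV sensor rows and convert them to JSON Lines."""
    reader = csv.DictReader(io.StringIO(raw_csv))
    out = []
    for row in reader:
        # Hypothetical column names, assumed for this sketch only.
        device = (row.get("device_id") or "").strip()
        temp = (row.get("temperature") or "").strip()
        if not device or not temp:
            continue  # Clean: drop incomplete rows
        try:
            value = float(temp)
        except ValueError:
            continue  # Clean: drop rows whose temperature is not numeric
        # Transform: normalise the device id and keep a typed value
        record = {"device_id": device.lower(), "temperature": value}
        # Convert: emit one JSON document per line
        out.append(json.dumps(record))
    return "\n".join(out)


raw = "device_id,temperature\nS1, 21.5\n,19.0\nS2,n/a\nS3,18\n"
print(clean_and_convert(raw))
```

Only the rows for `S1` and `S3` survive; the row with no device id and the row with a non-numeric temperature are dropped.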

Metadata: AWS Glue Data Catalog (Central Metadata Catalog for the data lake)

Allows you to share metadata between Amazon Athena, Amazon Redshift Spectrum, Amazon EMR and JDBC sources

Has the following extensions:

Search over metadata for data discovery

Connection info: JDBC URLs, credentials

Classification for identifying and parsing files

Versioning of table metadata as schemas evolve and other metadata is updated

Data Catalog crawlers: AWS Glue Data Catalog - Crawlers (helping catalog your data)

Crawlers automatically build your Data Catalog and keep it in sync

Automatically discover new data and extract schema definitions

# Detect schema changes and version tables

# Detect Hive-style partitions on Amazon S3

Built-in classifiers for popular types; custom classifiers using Grok expressions

Run ad hoc or on a schedule; serverless - only pay when the crawler runs
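To make "Hive-style partitions" concrete: these are `name=value` folder segments in an S3 object key. The sketch below shows the idea of recovering partition columns from a key. It is only an illustration of the naming convention, not how the Glue crawler is implemented, and the example key is made up.

```python
def parse_hive_partitions(key: str) -> dict:
    """Return the name=value path segments of an object key as a dict."""
    partitions = {}
    for segment in key.split("/")[:-1]:  # skip the file name itself
        if "=" in segment:
            name, _, value = segment.partition("=")
            partitions[name] = value
    return partitions


# Hypothetical object key, assumed for this example.
key = "sensors/year=2018/month=03/day=14/part-0000.json"
print(parse_hive_partitions(key))
```

Each recovered name (`year`, `month`, `day`) would become a partition column of the table, which lets query engines skip folders that a query does not need.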

Example:

Sensor / IoT device -> record-level data

(records temperature)

Business questions

1. What is going on with a specific sensor?

2. Daily Aggregations (device, inefficiencies, average temperature)

3. A real-time view of how many sensors are showing inefficiencies

Operations

1. Scale

2. High availability

3. Less management overhead

4. Pay for what I need

Querying it in Amazon Athena

# Either create a crawler to auto-generate the schema

or

# Write DDL through the Athena console, API, or JDBC/ODBC driver

# Start Querying Data
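For the hand-written DDL route, a statement for the sensor example might be generated as in the sketch below. Everything specific here is an assumption for illustration: the table name `sensor_readings`, the columns, the `dt` partition, the bucket path, and the choice of JSON as the storage format. The code only builds strings; submitting them to Athena is left out.

```python
def build_create_table_ddl(table, columns, partitions, location):
    """Build an Athena CREATE EXTERNAL TABLE statement for JSON data."""
    cols = ",\n  ".join(f"`{name}` {typ}" for name, typ in columns.items())
    parts = ", ".join(f"`{name}` {typ}" for name, typ in partitions.items())
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {table} (\n  {cols}\n)\n"
        f"PARTITIONED BY ({parts})\n"
        "ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'\n"
        f"LOCATION '{location}'"
    )


# Hypothetical schema and location, assumed for this example.
ddl = build_create_table_ddl(
    table="sensor_readings",
    columns={"device_id": "string", "temperature": "double", "event_time": "string"},
    partitions={"dt": "string"},
    location="s3://example-bucket/sensors/",
)
print(ddl)

# A query for the first business question (one specific sensor).
query = (
    "SELECT event_time, temperature FROM sensor_readings "
    "WHERE device_id = 'sensor-42' AND dt = '2018-03-14' "
    "ORDER BY event_time"
)
print(query)
```

With a partitioned table created this way, the partitions still have to be registered (for example with `MSCK REPAIR TABLE` or `ALTER TABLE ... ADD PARTITION`) before queries return data; a crawler takes care of that step automatically.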

Daily aggregation

Take the raw dataset and add a Glue-based event pipeline that lets you ETL the data

AWS Glue Job

# Serverless, event-driven execution

# Data is written out to S3

# Output table is automatically created in Amazon Athena
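The logic of such a daily aggregation can be sketched in plain Python. This is not Glue job code (a real job would typically use Spark on data in S3); it only shows the per-device, per-day average that the job would compute. The record fields `device_id`, `event_time` and `temperature` are assumed names.

```python
from collections import defaultdict
from datetime import datetime


def daily_average_temperature(records):
    """Average temperature per (device_id, day) over record-level data."""
    sums = defaultdict(lambda: [0.0, 0])  # (device, day) -> [total, count]
    for rec in records:
        day = datetime.fromisoformat(rec["event_time"]).date().isoformat()
        bucket = sums[(rec["device_id"], day)]
        bucket[0] += rec["temperature"]
        bucket[1] += 1
    return {key: total / count for key, (total, count) in sums.items()}


# Made-up sample records for illustration.
records = [
    {"device_id": "s1", "event_time": "2018-03-14T08:00:00", "temperature": 20.0},
    {"device_id": "s1", "event_time": "2018-03-14T20:00:00", "temperature": 22.0},
    {"device_id": "s2", "event_time": "2018-03-14T09:30:00", "temperature": 30.0},
    {"device_id": "s1", "event_time": "2018-03-15T08:00:00", "temperature": 19.0},
]
for (device, day), avg in sorted(daily_average_temperature(records).items()):
    print(device, day, avg)
```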

For the real-time stream, 'Kinesis Analytics' and 'Kinesis Firehose' produce the daily inefficiency count and push it into S3
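The streaming count can be pictured as a windowed aggregation: group events into fixed time windows and count the distinct sensors that look inefficient in each one. The sketch below is plain Python rather than a Kinesis Analytics application, and the definition of "inefficient" (temperature above a threshold), the threshold value and the window length are all assumptions made for the example.

```python
from collections import defaultdict

INEFFICIENT_ABOVE = 25.0  # hypothetical threshold, not from the source


def count_inefficient_per_window(events, window_seconds=60):
    """Count distinct inefficient sensors in each fixed (tumbling) window.

    `events` is an iterable of (epoch_seconds, device_id, temperature).
    """
    sensors = defaultdict(set)  # window start -> inefficient device ids
    for ts, device_id, temperature in events:
        if temperature > INEFFICIENT_ABOVE:
            window_start = ts - ts % window_seconds
            sensors[window_start].add(device_id)
    return {start: len(ids) for start, ids in sorted(sensors.items())}


# Made-up events: (timestamp in seconds, device id, temperature).
events = [
    (0, "s1", 26.0),
    (10, "s2", 24.0),
    (30, "s1", 27.0),
    (65, "s2", 28.0),
    (70, "s3", 29.0),
]
print(count_inefficient_per_window(events))
```

Passing `window_seconds=86400` would give one count per day instead of one per minute.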

This architecture has the following characteristics, with no servers to manage:

# Scale to hundreds of thousands of data sources

# Virtually infinite storage scalability

# Real-time and batch processing layers

# Interactive queries

# Highly available and durable

# Pay only for what you use
