Apache Lucene

01. Introduction to Apache Lucene

Lucene is the core framework that Solr adopted, extended, and delivered as an enterprise platform that other applications can make use of. Lucene was created by Doug Cutting, who also created Hadoop.

Why Lucene?

# Lucene is a widely used search storage engine for web-scale projects

# Highly scalable, high-performance open source search library

# Solr is an enterprise-grade web search platform built on top of Lucene.

# To understand the basics of search techniques and the underlying storage engine of Solr

1. Most of the terminology and concepts that Solr uses are derived from Lucene.

2. Lucene gives you raw, low-level flexibility to tinker with the underlying APIs, mechanics, and components of Solr.

Lucene is one of the most widely used search storage engines across different projects. Solr is one of them; apart from Solr, other products such as Elasticsearch embed Lucene for search.

Lucene is a very highly scalable, high-performance search library that you can use for huge applications crunching terabytes of data and handling numerous transactions. (So don't be under the impression that Lucene or Solr is only for mid-size applications.) Lucene can scale to huge datasets as well.

Google search engine vs Lucene

Google's search engine is a very complex product that does much more than Solr or Lucene. Lucene is a search library with a minimal set of search features, which is more than enough for traditional large-scale applications such as e-commerce applications. Google Search is a huge web search engine that does many things internally, such as intelligent ranking, intelligent sorting, and intelligent ads. The basic idea behind Lucene and Google's search engine is the same: everything has to be indexed, and everything is searched through the index. The complexity and the features are different.

Lucene is not a framework or library that can go and search the web on its own. Lucene is a fairly simple API that takes a document and indexes it. If you give it a term, it searches the document store, retrieves the matching documents, and gives them back to you. That is the boundary of Lucene.

To use Lucene for web search, you need a crawler, such as Apache Nutch (http://nutch.apache.org/) in Java, Scrapy in Python, or Heritrix in Java, that fetches pages from the web and hands them to Lucene. Once you give a document to Lucene, Lucene takes over from there and indexes it.

What is Lucene?

# Lucene is a simple, powerful Java search library (API library) that lets you easily add search, or Information Retrieval (IR), to applications

# Used by LinkedIn, Twitter, ... and many more

# Scalable, high-performance indexing

# Powerful, accurate and efficient search algorithms

# Cross-platform solution

@ Open source and 100% pure Java

@ Implementations in other programming languages available that are index-compatible

02. Exploring Lucene

Lucene is a very highly scalable API library (you can also call it a platform) that can easily crunch terabytes, or even petabytes, of information on modern high-end servers within half a day to a day. Lucene is very lightweight and doesn't require a huge memory heap.

Lucene is very efficient at both batch (bulk) indexing, where it accumulates updates and indexes them at periodic intervals, and incremental indexing, where the index is updated immediately as each document comes in.
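The trade-off between batch and immediate indexing can be sketched with a toy buffer. This is a hypothetical class, not Lucene's API: it flushes on a document-count threshold instead of a time interval, and a threshold of 1 models immediate indexing. Lucene's IndexWriter exposes comparable knobs through IndexWriterConfig.setMaxBufferedDocs and setRAMBufferSizeMB.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model (hypothetical, not Lucene's API): documents are buffered in memory
// and written out as one batch once maxBufferedDocs is reached.
class ToyBufferedIndexer {
    private final int maxBufferedDocs;                            // 1 = index every document immediately
    private final List<String> buffer = new ArrayList<>();        // pending, not yet indexed
    final List<List<String>> flushedBatches = new ArrayList<>();  // stands in for written segments

    ToyBufferedIndexer(int maxBufferedDocs) {
        if (maxBufferedDocs < 1) throw new IllegalArgumentException("maxBufferedDocs must be >= 1");
        this.maxBufferedDocs = maxBufferedDocs;
    }

    void add(String doc) {
        buffer.add(doc);
        if (buffer.size() >= maxBufferedDocs) {
            flush();
        }
    }

    // Writes everything accumulated so far as a single batch (e.g. on a timer or at shutdown).
    void flush() {
        if (buffer.isEmpty()) return;
        flushedBatches.add(new ArrayList<>(buffer));
        buffer.clear();
    }

    public static void main(String[] args) {
        ToyBufferedIndexer batch = new ToyBufferedIndexer(3);
        for (int i = 0; i < 5; i++) batch.add("doc-" + i);
        batch.flush(); // push out the partial batch
        System.out.println(batch.flushedBatches); // [[doc-0, doc-1, doc-2], [doc-3, doc-4]]
    }
}
```

Larger batches mean fewer, bigger writes; a threshold of 1 makes every document visible as soon as it arrives, at the cost of many small writes.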

Key Features

# Indexing and Searching

# Ranked searching (best results returned first, e.g. results ranked by low price and high rating)

# Many powerful query types: phrase queries, wildcard queries, proximity queries

# Fielded search (e.g. title, author, contents etc.)

# Sorting by any field

# Multiple-index searching with merged results

# Flexible faceting, highlighting, joins, and results grouping.

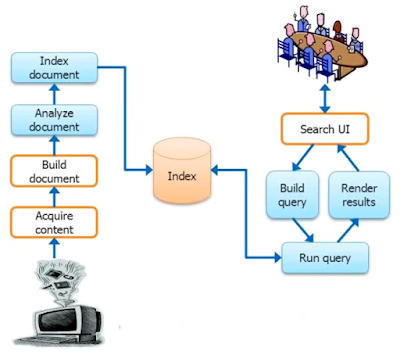

A Search system

# The first step of every search engine is a concept called indexing.

# Indexing: the processing of original data into a highly efficient cross-reference lookup in order to facilitate rapid searching.

# Analyze: a search engine does not index text directly. The text is broken into a series of individual atomic elements called 'tokens'.

# Searching: the process of consulting the search index and retrieving the documents matching the query, sorted in the requested sort order.

You can configure cache sizes based on the available memory.

There are two models for retrieving search results and ordering them by similarity or relevance:

1. Pure Boolean model: you search for a particular term, and a document either matches it or it does not. No scoring (ranking or sort order of the documents) is done; the model just retrieves the documents matching the term and returns them.

2. Vector space model: when you write a query, the query is transformed into a vector in a multi-dimensional space, and each document is also transformed into a vector in the same space. The query vector is compared with the document vectors, and the documents are returned with a similarity score.

Relevance and similarity are used for scoring, i.e. giving weights to documents. The more weight a document has, based on some attributes, the higher it surfaces in the sorted result list.
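The difference between the two models can be sketched in plain Java. This is a toy illustration, not Lucene's scoring code: the class name, the simple tokenizer, and the use of raw term-frequency vectors compared by cosine similarity are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Map;

class RetrievalModels {
    // Simplified analysis: lowercase and split on non-word characters.
    static List<String> tokens(String text) {
        List<String> out = new ArrayList<>();
        for (String t : text.toLowerCase(Locale.ROOT).split("\\W+")) {
            if (!t.isEmpty()) out.add(t);
        }
        return out;
    }

    // Boolean model: the document matches only if it contains every query term; no score.
    static boolean matchesAll(String doc, String query) {
        return new HashSet<>(tokens(doc)).containsAll(tokens(query));
    }

    // Term-frequency vector: term -> number of occurrences.
    static Map<String, Integer> termFrequencies(String text) {
        Map<String, Integer> v = new HashMap<>();
        for (String t : tokens(text)) v.merge(t, 1, Integer::sum);
        return v;
    }

    // Vector space model: cosine of the angle between the query and document vectors.
    static double cosine(String doc, String query) {
        Map<String, Integer> d = termFrequencies(doc);
        Map<String, Integer> q = termFrequencies(query);
        double dot = 0;
        for (Map.Entry<String, Integer> e : q.entrySet()) {
            dot += e.getValue() * d.getOrDefault(e.getKey(), 0);
        }
        double norms = norm(d) * norm(q);
        return norms == 0 ? 0 : dot / norms;
    }

    private static double norm(Map<String, Integer> v) {
        double sum = 0;
        for (int x : v.values()) sum += (double) x * x;
        return Math.sqrt(sum);
    }

    public static void main(String[] args) {
        System.out.println(matchesAll("cheap red shoes", "red shoes")); // true
        System.out.println(matchesAll("red hat", "red shoes"));         // false
        System.out.println(cosine("red hat", "red shoes"));             // about 0.5: a partial match still scores
    }
}
```

Here "red hat" fails the Boolean match for "red shoes" but still receives a partial similarity score. Lucene's classic TF-IDF similarity (ClassicSimilarity) is a vector-space variant of this idea; since Lucene 6.0 the default similarity is BM25.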

Why Indexing?

# Search engine indexing collects, parses, and stores data to facilitate fast and accurate information retrieval.

# The purpose of storing an index is to optimize speed and performance in finding relevant documents for a search query.

# Without an index, the search engine would scan every document in the corpus, which would require considerable time and computing power.

# For example, while an index of 10,000 documents can be queried within milliseconds, a sequential scan of every word in 10,000 large documents could take hours.

What is Indexing?


Lucene and Solr are mostly used for unstructured data, i.e. anything that could appear in a text file (a stream of text). They are used to search for 'words' in documents.

Lucene - Architecture

# Indexing is the core layer of Lucene

# Indexing uses one or more analyzers as a strategy for index writing

# The analyzer strips useless content from Lucene documents, such as whitespace, hyphens, and stop words, depending on the chosen analyzer.

# To search inside the index, the user has to provide a human-readable expression called query string.

Lucene - Inverted Indexing Technique

Inverted indexing (also referred to as an inverted file) uses a very simple data structure that stores a mapping from content, such as words or numbers, to the documents that contain it (it is very similar to the index at the back of a book). The intention of an inverted index is to make searches very fast. The cost is the time we need to spend building the index.
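The core idea can be sketched as a toy inverted index in plain Java: a map from each term to the sorted set of document ids that contain it (its postings). The class name and the naive lowercase/split tokenizer are assumptions for illustration; Lucene's real index is a far more compact on-disk structure.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.SortedSet;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical toy, not Lucene's data structure.
class ToyInvertedIndex {
    // term -> sorted set of document ids (the "postings list")
    private final Map<String, SortedSet<Integer>> postings = new TreeMap<>();
    private final List<String> docs = new ArrayList<>();

    // Indexing cost is paid here: every token of the document is recorded once.
    int add(String text) {
        int id = docs.size();
        docs.add(text);
        for (String t : text.toLowerCase(Locale.ROOT).split("\\W+")) {
            if (!t.isEmpty()) postings.computeIfAbsent(t, k -> new TreeSet<>()).add(id);
        }
        return id;
    }

    // Search is a single map lookup instead of a scan over every document.
    SortedSet<Integer> lookup(String term) {
        SortedSet<Integer> ids = postings.get(term.toLowerCase(Locale.ROOT));
        return ids == null ? Collections.emptySortedSet() : Collections.unmodifiableSortedSet(ids);
    }

    public static void main(String[] args) {
        ToyInvertedIndex index = new ToyInvertedIndex();
        index.add("Lucene in Action");
        index.add("Solr in Action");
        System.out.println(index.lookup("action")); // [0, 1]
        System.out.println(index.lookup("lucene")); // [0]
    }
}
```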

# Indexing uses the inverted index technique (e.g. a book index), because looking terms up in an index is faster than reading every document.

# Write a new segment for each new document insertion

# Merge the segments when there are too many of them in the index

A merge-sort technique is used to merge the segments into the index store.

# Single updates are costly, bulk updates are preferred due to merging.
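Segment writing and merging can be modeled in a few lines. This is a hypothetical toy, not Lucene's segment format or merge policy: every insertion becomes its own term-sorted segment, and once there are more than maxSegments segments they are all merged using the merge step of merge sort, walking both sorted term lists once.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical toy model of segment-based indexing.
class ToySegmentedIndex {
    private final int maxSegments;
    final List<TreeMap<String, List<Integer>>> segments = new ArrayList<>();
    private int nextDocId = 0;

    ToySegmentedIndex(int maxSegments) { this.maxSegments = maxSegments; }

    // Each insertion writes a new, tiny, term-sorted segment.
    void addDocument(String text) {
        segments.add(segmentFor(nextDocId++, text));
        if (segments.size() > maxSegments) { // too many segments: merge them into one
            TreeMap<String, List<Integer>> merged = segments.get(0);
            for (int i = 1; i < segments.size(); i++) merged = merge(merged, segments.get(i));
            segments.clear();
            segments.add(merged);
        }
    }

    static TreeMap<String, List<Integer>> segmentFor(int docId, String text) {
        TreeMap<String, List<Integer>> seg = new TreeMap<>();
        for (String t : text.toLowerCase(Locale.ROOT).split("\\W+")) {
            if (!t.isEmpty()) seg.putIfAbsent(t, new ArrayList<>(Collections.singletonList(docId)));
        }
        return seg;
    }

    // Merge step of merge sort over two term-sorted segments.
    // Assumes every doc id in `older` is smaller than every doc id in `newer`,
    // so concatenating postings keeps them sorted.
    static TreeMap<String, List<Integer>> merge(TreeMap<String, List<Integer>> older,
                                                TreeMap<String, List<Integer>> newer) {
        TreeMap<String, List<Integer>> out = new TreeMap<>();
        Iterator<Map.Entry<String, List<Integer>>> a = older.entrySet().iterator();
        Iterator<Map.Entry<String, List<Integer>>> b = newer.entrySet().iterator();
        Map.Entry<String, List<Integer>> ea = next(a);
        Map.Entry<String, List<Integer>> eb = next(b);
        while (ea != null || eb != null) {
            int cmp = (ea == null) ? 1 : (eb == null) ? -1 : ea.getKey().compareTo(eb.getKey());
            if (cmp < 0) {          // term only in the older segment
                out.put(ea.getKey(), new ArrayList<>(ea.getValue()));
                ea = next(a);
            } else if (cmp > 0) {   // term only in the newer segment
                out.put(eb.getKey(), new ArrayList<>(eb.getValue()));
                eb = next(b);
            } else {                // same term in both: concatenate postings
                List<Integer> postings = new ArrayList<>(ea.getValue());
                postings.addAll(eb.getValue());
                out.put(ea.getKey(), postings);
                ea = next(a);
                eb = next(b);
            }
        }
        return out;
    }

    private static <T> T next(Iterator<T> it) { return it.hasNext() ? it.next() : null; }

    public static void main(String[] args) {
        ToySegmentedIndex idx = new ToySegmentedIndex(2);
        idx.addDocument("lucene index");
        idx.addDocument("solr index");
        idx.addDocument("lucene merge"); // third segment exceeds the limit and triggers a merge
        System.out.println(idx.segments.size()); // 1
        System.out.println(idx.segments.get(0)); // {index=[0, 1], lucene=[0, 2], merge=[2], solr=[1]}
    }
}
```

Real Lucene buffers many documents per segment and merges segments in the background according to a configurable MergePolicy, such as TieredMergePolicy.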

Lucene and Solr are a natural fit when you have bulk updates combined with frequent or random reads (recent versions can also handle random reads combined with random updates).

Lucene - Storage Schema

# Unlike databases, Lucene does not have a common global schema

# Lucene has indexes, which contain documents.

# Each document can have 'multiple fields'.

# Every document can have its own set of fields

# A field can be used to 'index', 'search', or 'store' a value for retrieval.

# You can add a new field at any point in time.

Document: the atomic unit (a.k.a. record) of the index; it can have multiple fields

Field: has a 'name' and a 'value'

Raw content needs to be transformed before it is set in a document

Different documents can have different fields.

A field can be of any type, e.g. binary, string, or integer

Field may

# be indexed or not

@ An indexed field may or may not be analyzed (i.e. tokenized with an Analyzer)

@ Non-analyzed fields view the entire value as a single token (useful for URLs, paths, dates, social security numbers etc.)

# be stored or not

@ Useful for fields that you'd like to display to users

# optionally store term vectors

@ Like an inverted index on the field's terms

@ Useful for highlighting, finding similar documents and categorization.

Lucene - Field Options

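In Lucene, Field is itself a class that exposes these options (the enums below are the Lucene 3.x API; Lucene 4.0 deprecated Field.Index and Field.TermVector in favor of FieldType and field classes such as TextField and StringField):

1. Field.Store

# NO: Do not store the field value in the index

# YES: Store the field value in the index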

2. Field.Index

# ANALYZED: Tokenize with an Analyzer

# NOT_ANALYZED: Do not tokenize

# NO: Do not index this field

# Other advanced options

3. Field.TermVector

# NO: Do not store term vectors

# YES: Store term vectors

# Other options to store positions and offsets
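The effect of the Store and Index options can be mimicked with a toy class. It is a hypothetical model, not Lucene code: the enums only echo the option names listed above, the tokenizer is a naive lowercase/split, and term vectors are left out.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Locale;

// Hypothetical toy model of what the Store and Index options decide.
class ToyFieldOptions {
    enum Store { YES, NO }
    enum Index { ANALYZED, NOT_ANALYZED, NO }

    // Terms that would go into the inverted index for this field value.
    static List<String> indexedTerms(String value, Index index) {
        switch (index) {
            case ANALYZED: { // tokenize: lowercase and split on non-word characters
                List<String> terms = new ArrayList<>();
                for (String t : value.toLowerCase(Locale.ROOT).split("\\W+")) {
                    if (!t.isEmpty()) terms.add(t);
                }
                return terms;
            }
            case NOT_ANALYZED: // the whole value is a single token (URLs, paths, IDs)
                return Collections.singletonList(value);
            default:           // Index.NO: the field is not searchable
                return Collections.emptyList();
        }
    }

    // Value returned to the user at retrieval time, or null when not stored.
    static String storedValue(String value, Store store) {
        return store == Store.YES ? value : null;
    }

    public static void main(String[] args) {
        String url = "http://lucene.apache.org/core";
        System.out.println(indexedTerms(url, Index.ANALYZED));     // [http, lucene, apache, org, core]
        System.out.println(indexedTerms(url, Index.NOT_ANALYZED)); // [http://lucene.apache.org/core]
    }
}
```

The URL shows why non-analyzed fields suit identifiers: analyzed, it is split into pieces that can no longer be matched as a whole.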

Analyzers

# Lucene does not deal with parsing documents or with document formats

It is the responsibility of the application using Lucene to use an appropriate parser to convert the original format into plain text before passing it to Lucene.

# 'Analyzers' handle the job of analyzing texts into tokens or keywords to be searched or indexed.

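# An Analyzer builds 'TokenStreams', which analyze text; an Analyzer represents a policy for extracting index terms from text.

# There are a few default Analyzers provided by Lucene.

Technically, an IndexWriter (and a QueryParser) is configured with a single Analyzer; to apply different analysis to different fields, wrap several analyzers in a PerFieldAnalyzerWrapper.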

Tokenization

Tokenization is the process of splitting text into tokens (exactly how depends on the tokenizer used).

# Plain text passed to Lucene for indexing goes through a process generally called 'tokenization'.

# Tokenization is the process of 'breaking a stream of text' into words, phrases, symbols, or other meaningful elements called 'tokens'.

# In some cases simply breaking the input text into tokens is not enough - a deeper analysis may be needed.

# Lucene includes both 'pre- and post-tokenization' analysis facilities.

# The list of tokens becomes input for further processing such as 'parsing'.

Pre and Post Tokenization

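Pre-tokenization: analysis can include (but is not limited to) stripping HTML markup, and transforming or removing text matching arbitrary patterns or sets of fixed strings.

Post-tokenization: analysis can include (but is not limited to) stemming, stop-word filtering, text normalization (e.g. stripping accents), and synonym expansion.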
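Pre-tokenization, tokenization, and post-tokenization can be chained in a toy analyzer. This is a hypothetical sketch, not Lucene's Analyzer API; in Lucene the corresponding pieces are a CharFilter (for example HTMLStripCharFilter), a Tokenizer, and TokenFilters such as LowerCaseFilter and StopFilter. The regex-based HTML stripping and the tiny stop-word list are assumptions that are fine for illustration but not for real HTML.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Hypothetical toy analyzer chain: strip markup -> split -> lowercase + stop words.
class ToyAnalyzer {
    private static final Set<String> STOP_WORDS =
            new HashSet<>(Arrays.asList("a", "an", "and", "the", "of", "is"));

    // Pre-tokenization: replace HTML tags with spaces (naive regex, illustration only).
    static String stripHtml(String input) {
        return input.replaceAll("<[^>]*>", " ");
    }

    // Tokenization: split on runs of characters that are neither letters nor digits.
    static List<String> tokenize(String text) {
        List<String> tokens = new ArrayList<>();
        for (String t : text.split("[^\\p{L}\\p{N}]+")) {
            if (!t.isEmpty()) tokens.add(t);
        }
        return tokens;
    }

    // Post-tokenization: lowercase each token and drop stop words.
    static List<String> filter(List<String> tokens) {
        List<String> out = new ArrayList<>();
        for (String t : tokens) {
            String lower = t.toLowerCase(Locale.ROOT);
            if (!STOP_WORDS.contains(lower)) out.add(lower);
        }
        return out;
    }

    static List<String> analyze(String raw) {
        return filter(tokenize(stripHtml(raw)));
    }

    public static void main(String[] args) {
        System.out.println(analyze("<p>The Quick-Brown fox</p>")); // [quick, brown, fox]
    }
}
```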

Indexing Analysis vs Search Analysis

# The choice of Analyzer defines how the indexed text is tokenized and searched.

# Selecting the correct analyzer is crucial for search quality, and can also affect indexing and search performance.

# Start with same analyzer for indexing and search, otherwise searches would not find what they are supposed to.

# In some cases a different analyzer is required for indexing and search, for instance:

@ Certain searches require more stop words to be filtered (i.e. more than those that were filtered at indexing)

@ Query expansion by synonyms, acronyms, auto spell correction etc.
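Query-time synonym expansion on top of a shared index-time analysis can be sketched as follows. The synonym table and the class are hypothetical; search-time analysis first runs the same steps as indexing and only then adds the expansion, so the basic terms still line up with what was indexed.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

// Hypothetical toy: index-time analysis vs. search-time analysis with synonyms.
class ToyQueryExpansion {
    // Hypothetical synonym table, applied only at search time.
    private static final Map<String, List<String>> SYNONYMS = new HashMap<>();
    static {
        SYNONYMS.put("tv", Arrays.asList("tv", "television"));
        SYNONYMS.put("laptop", Arrays.asList("laptop", "notebook"));
    }

    // Index-time analysis: lowercase and split on non-word characters.
    static List<String> indexAnalyze(String text) {
        List<String> out = new ArrayList<>();
        for (String t : text.toLowerCase(Locale.ROOT).split("\\W+")) {
            if (!t.isEmpty()) out.add(t);
        }
        return out;
    }

    // Search-time analysis: the index-time steps, then synonym expansion.
    static List<String> searchAnalyze(String query) {
        List<String> out = new ArrayList<>();
        for (String t : indexAnalyze(query)) {
            out.addAll(SYNONYMS.getOrDefault(t, Collections.singletonList(t)));
        }
        return out;
    }

    // OR semantics: a document matches if it contains any expanded query term.
    static boolean matches(String doc, String query) {
        Set<String> indexed = new HashSet<>(indexAnalyze(doc));
        for (String term : searchAnalyze(query)) {
            if (indexed.contains(term)) return true;
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(searchAnalyze("TV"));                    // [tv, television]
        System.out.println(matches("Cheap television sets", "TV")); // true
    }
}
```

Expanding at search time keeps the index small and lets the synonym list change without reindexing.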

Lucene - Writing to Index

'Classes used when indexing documents with Lucene'

Lucene - Writing Index Classes

Lucene - Search In Index

'Query Parser' translates a 'textual expression' from the end user into 'an arbitrarily complex query' for searching

Lucene - Searching Index Classes
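The writing and searching classes can be tied together in one short sketch. It assumes Lucene 8.x or 9.x with the lucene-core and lucene-queryparser modules on the classpath (ByteBuffersDirectory does not exist in older releases); the field names 'path' and 'contents' and the sample documents are hypothetical. On recent 9.x releases IndexSearcher.doc is deprecated in favor of searcher.storedFields().document(...).

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.queryparser.classic.ParseException;
import org.apache.lucene.queryparser.classic.QueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.ByteBuffersDirectory;
import org.apache.lucene.store.Directory;

class LuceneQuickStart {
    // Indexes {path, text} pairs into an in-memory index, then returns the paths
    // of the top hits (best score first) for a query string.
    static List<String> indexAndSearch(String[][] docs, String queryString) {
        try (Directory dir = new ByteBuffersDirectory();
             Analyzer analyzer = new StandardAnalyzer()) {
            // Writing: Directory + Analyzer + IndexWriter + Document/Field
            try (IndexWriter writer = new IndexWriter(dir, new IndexWriterConfig(analyzer))) {
                for (String[] d : docs) {
                    Document doc = new Document();
                    doc.add(new StringField("path", d[0], Field.Store.YES));  // one token, stored
                    doc.add(new TextField("contents", d[1], Field.Store.NO)); // analyzed, not stored
                    writer.addDocument(doc);
                }
            } // closing the writer commits the changes

            // Searching: QueryParser -> Query, IndexSearcher -> TopDocs
            List<String> paths = new ArrayList<>();
            try (DirectoryReader reader = DirectoryReader.open(dir)) {
                IndexSearcher searcher = new IndexSearcher(reader);
                Query query = new QueryParser("contents", analyzer).parse(queryString);
                TopDocs top = searcher.search(query, 10);
                for (ScoreDoc hit : top.scoreDocs) {
                    paths.add(searcher.doc(hit.doc).get("path"));
                }
            }
            return paths;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ParseException e) {
            throw new IllegalArgumentException("Bad query: " + queryString, e);
        }
    }

    public static void main(String[] args) {
        String[][] docs = {
            {"/a.txt", "Lucene is a Java search library"},
            {"/b.txt", "Solr is built on top of Lucene"},
            {"/c.txt", "Hadoop stores big data"}
        };
        System.out.println(indexAndSearch(docs, "search")); // [/a.txt]
        System.out.println(indexAndSearch(docs, "lucene")); // both /a.txt and /b.txt, in score order
    }
}
```

StringField keeps the path as a single token (like NOT_ANALYZED), while TextField runs the text through the analyzer (like ANALYZED). The same StandardAnalyzer instance is passed to both IndexWriterConfig and QueryParser, so indexing and search analysis match.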

Lucene - Using API Libraries

# You can use the Lucene API libraries in your Java project in multiple ways

@ Using Maven (lucene-core, lucene-analyzers-common; Lucene 9.x renamed the latter to lucene-analysis-common) and running in an IDE

@ Directly adding to your classpath (Command line or IDE)

Lucene - Key packages
