AWS Data Pipeline

First look

- AWS Data Pipeline is a web service that you can use to automate the movement and transformation of data. With AWS Data Pipeline, you can define data-driven workflows, so that tasks can be dependent on the successful completion of previous tasks.

- AWS Data Pipeline helps you move, integrate, and process data across AWS compute and storage resources, as well as your on-premises resources. AWS Data Pipeline supports the integration of data and activities across multiple AWS regions.

- Data Pipeline handles the operational details, such as scheduling, dependency tracking, retries, notifications and resource provisioning, so that you can focus on the business logic.
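
The idea of a data-driven workflow, where a task runs only after the tasks it depends on have succeeded, can be sketched with Python's standard `graphlib`. This is an illustration of the dependency logic only, not AWS code. The activity names are hypothetical. In a real pipeline definition the dependency would be declared on the activity object (for example with its `dependsOn` field).

```python
from graphlib import TopologicalSorter

# Hypothetical activities, each mapped to the activities it depends on.
# In a pipeline definition this relationship is expressed with "dependsOn".
dependencies = {
    "CopyLogsToS3": set(),
    "MergeGeoData": {"CopyLogsToS3"},
    "LoadResultsToDb": {"MergeGeoData"},
    "BuildReport": {"LoadResultsToDb"},
}


def run_order(deps):
    """Return an order in which every activity comes after its prerequisites."""
    return list(TopologicalSorter(deps).static_order())


def skipped_after_failure(deps, failed):
    """Activities that never run because `failed`, or something upstream of them, failed."""
    skipped = set()
    for task in run_order(deps):
        if deps.get(task, set()) & ({failed} | skipped):
            skipped.add(task)
    return skipped


print(run_order(dependencies))
# ['CopyLogsToS3', 'MergeGeoData', 'LoadResultsToDb', 'BuildReport']
print(sorted(skipped_after_failure(dependencies, "MergeGeoData")))
# ['BuildReport', 'LoadResultsToDb']
```

Because this example is a simple chain, only one valid order exists. With branching dependencies, `static_order()` may return any order that respects them.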

Where does the data live?

- Amazon S3
- Amazon RDS
- Amazon Redshift
- Amazon DynamoDB
- Amazon EMR (HDFS)
- On-premises

What data do we have?

- Historical web server logs for all users
- Near real-time purchase data for registered users
- User demographics data (from signup)
- 3rd-party IP geolocation data

| Input data node (with precondition check) | Activity (with failure & delay notification) |
|---|---|
| A directory in an S3 bucket | SQL query |
| DynamoDB table | Script running on an EC2 instance |
| Table in a relational database | EMR job |
| On-premises data | |
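
To make the two columns concrete, the sketch below writes one row as pipeline-definition objects: an S3 input data node guarded by a precondition, and an activity that sends an SNS notification on failure or lateness. The object types and field names (`S3PrefixNotEmpty`, `S3DataNode`, `SnsAlarm`, `precondition`, `onFail`, `onLateAction`, `lateAfterTimeout`) are taken from the AWS Data Pipeline object reference as best I recall; please check them against the current documentation. All IDs, the bucket path and the topic ARN are hypothetical. The schedule and the compute resource are deliberately left out.

```python
import json


def ref(object_id):
    """Objects in a pipeline definition refer to each other as {"ref": <id>}."""
    return {"ref": object_id}


# All IDs, the bucket path and the SNS topic ARN are hypothetical placeholders.
# Schedule and runsOn/workerGroup are omitted to keep the focus on the
# precondition and the notifications.
objects = [
    {   # precondition: the input "directory" must contain at least one object
        "id": "InputReady",
        "name": "InputReady",
        "type": "S3PrefixNotEmpty",
        "s3Prefix": "s3://example-bucket/logs/",
    },
    {   # input data node guarded by the precondition above
        "id": "LogsInput",
        "name": "LogsInput",
        "type": "S3DataNode",
        "directoryPath": "s3://example-bucket/logs/",
        "precondition": ref("InputReady"),
    },
    {   # notification action published to an SNS topic
        "id": "OpsAlarm",
        "name": "OpsAlarm",
        "type": "SnsAlarm",
        "topicArn": "arn:aws:sns:us-east-1:123456789012:example-topic",
        "subject": "ProcessLogs needs attention",
        "message": "ProcessLogs failed or is running late.",
        "role": "DataPipelineDefaultRole",
    },
    {   # activity that raises the alarm on failure and on lateness
        "id": "ProcessLogs",
        "name": "ProcessLogs",
        "type": "ShellCommandActivity",
        "command": "echo processing logs",
        "input": ref("LogsInput"),
        "lateAfterTimeout": "1 hour",
        "onLateAction": ref("OpsAlarm"),
        "onFail": ref("OpsAlarm"),
    },
]


def dangling_refs(objs):
    """List (object id, field, target) for every ref that points at no object."""
    ids = {o["id"] for o in objs}
    return [
        (o["id"], field, value["ref"])
        for o in objs
        for field, value in o.items()
        if isinstance(value, dict) and "ref" in value and value["ref"] not in ids
    ]


print(dangling_refs(objects))  # []
print(json.dumps({"objects": objects}, indent=2))
```

The `dangling_refs` helper is a small local sanity check added for illustration. It catches references to object IDs that do not exist before you try to upload a definition.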

Where can you run activities?

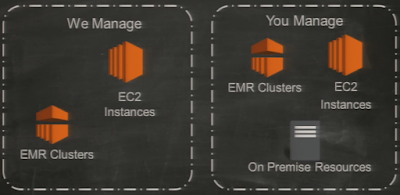

Resources managed by Data Pipeline

- Data Pipeline launches the resource when your pipeline needs it.
- Data Pipeline shuts it down when your pipeline is done with it.
- This makes it easy to pay only for what you use.
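
A managed resource is declared as an object that an activity points to. The sketch below assumes an `Ec2Resource` object referenced from the activity's `runsOn` field, with `terminateAfter` bounding the instance's lifetime. These names follow the AWS object reference as I remember it. The helper function and IDs are hypothetical, and roles, instance type and schedule are omitted.

```python
import json


def managed_shell_activity(activity_id, command, max_lifetime="1 hour"):
    """Return an activity plus the EC2 resource Data Pipeline should manage for it.

    Names are hypothetical. Roles, instance type and schedule are omitted and
    would normally come from the pipeline's Default object or be set explicitly.
    """
    resource_id = f"{activity_id}Ec2"
    resource = {
        "id": resource_id,
        "name": resource_id,
        "type": "Ec2Resource",
        # Upper bound on how long the instance may live before it is terminated.
        "terminateAfter": max_lifetime,
    }
    activity = {
        "id": activity_id,
        "name": activity_id,
        "type": "ShellCommandActivity",
        "command": command,
        # runsOn asks Data Pipeline to launch (and later shut down) this resource.
        "runsOn": {"ref": resource_id},
    }
    return [resource, activity]


print(json.dumps({"objects": managed_shell_activity("NightlyJob", "echo hi")}, indent=2))
```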

Resources you manage

- Control them by installing the Java-based task agent (Task Runner), which polls the web service for work.
- Apply whatever manual configuration you need on the hosts.
- Run your Data Pipeline activities on existing resources, with zero incremental footprint.
- Run activities on on-premises resources.
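
On the definition side, the difference between a managed and a self-managed resource is roughly whether the activity names a resource (`runsOn`) or a worker group (`workerGroup`) that your own agent polls. The sketch below is a hypothetical helper that only inspects those two fields. It assumes `runsOn` takes precedence when both are present. The worker-group name and script path are invented for illustration.

```python
def execution_target(activity):
    """Say where an activity would run, judging only by runsOn and workerGroup.

    This sketch assumes runsOn wins when both fields are present.
    """
    if "runsOn" in activity:
        return f"managed resource {activity['runsOn']['ref']!r}"
    if "workerGroup" in activity:
        return f"your own host(s) polling worker group {activity['workerGroup']!r}"
    raise ValueError("activity needs runsOn or workerGroup")


# Hypothetical activity for a host you already run; the task agent on that
# host would be started with the same worker group so it picks up this work.
on_my_host = {
    "id": "NightlyExport",
    "name": "NightlyExport",
    "type": "ShellCommandActivity",
    "command": "/opt/scripts/nightly_export.sh",
    "workerGroup": "reporting-hosts",
}

print(execution_target(on_my_host))
# your own host(s) polling worker group 'reporting-hosts'
```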

On-premises resources

- The task agent works on any *nix machine with Java and Internet access.
- The agent includes logic to run local software or to copy data between on-premises systems and AWS.
- Agent activities are scheduled and managed just like any other activity.
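
The agent's poll-for-work pattern can be simulated locally. The real agent talks to the Data Pipeline web service through API actions such as `PollForTask` and `SetTaskStatus`. The `FakePipelineService` class below is an in-memory stand-in that only borrows those ideas. It is not the AWS SDK, and its method names are hypothetical.

```python
from collections import deque


class FakePipelineService:
    """In-memory stand-in for the Data Pipeline web service (hypothetical)."""

    def __init__(self, tasks):
        # Each task is a tuple: (worker_group, task_id, callable that does the work).
        self._queue = deque(tasks)
        self.statuses = {}

    def poll_for_task(self, worker_group):
        """Hand out the next queued task for this worker group, or None."""
        for _ in range(len(self._queue)):
            task = self._queue.popleft()
            if task[0] == worker_group:
                return task
            self._queue.append(task)  # not ours: put it back for another agent
        return None

    def set_task_status(self, task_id, status):
        self.statuses[task_id] = status


def run_agent(service, worker_group, max_polls=10):
    """Poll for work, run each task locally and report the outcome."""
    for _ in range(max_polls):
        task = service.poll_for_task(worker_group)
        if task is None:
            break  # a real agent would sleep and poll again
        _, task_id, work = task
        try:
            work()
            service.set_task_status(task_id, "FINISHED")
        except Exception:
            service.set_task_status(task_id, "FAILED")


def failing_copy():
    raise RuntimeError("simulated copy failure")


service = FakePipelineService([
    ("on-prem", "copy-1", lambda: None),
    ("other-group", "report-1", lambda: None),
    ("on-prem", "load-1", failing_copy),
])
run_agent(service, "on-prem")
print(service.statuses)  # {'copy-1': 'FINISHED', 'load-1': 'FAILED'}
```

Only tasks for the agent's own worker group are picked up. The `report-1` task stays queued for whichever agent polls `other-group`.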

Supported Activities

- Arbitrary Linux applications: anything that you can run from the shell
- Copies between different combinations of data sources
- SQL queries
- User-defined Amazon EMR jobs
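
Each kind of activity above corresponds to an activity object type. The sketch maps them to `ShellCommandActivity`, `CopyActivity`, `SqlActivity` and `EmrActivity`. It also checks a small, assumed subset of fields for each type. This subset is a simplification for illustration, not the full list of required fields, so consult the object reference before relying on it.

```python
# Activity object types that correspond to the four kinds listed above.
ACTIVITY_TYPES = {
    "shell": "ShellCommandActivity",  # anything you can run from a shell
    "copy": "CopyActivity",           # copy between data nodes
    "sql": "SqlActivity",             # run a SQL script against a database
    "emr": "EmrActivity",             # run steps on an EMR cluster
}

# Assumed subset of fields per type; any one field in a tuple satisfies it.
MINIMAL_FIELDS = {
    "ShellCommandActivity": [("command", "scriptUri")],
    "CopyActivity": [("input",), ("output",)],
    "SqlActivity": [("database",), ("script", "scriptUri")],
    "EmrActivity": [("step",)],
}


def missing_fields(activity):
    """Return the field groups that the activity does not satisfy."""
    groups = MINIMAL_FIELDS.get(activity["type"], [])
    return [group for group in groups if not any(field in activity for field in group)]


copy = {"id": "CopyLogs", "type": ACTIVITY_TYPES["copy"], "input": {"ref": "LogsInput"}}
print(missing_fields(copy))  # [('output',)]
```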

Preconditions

- Hosted by AWS Data Pipeline:
  - Amazon S3 files/directories exist
  - Amazon DynamoDB tables exist
  - Amazon RDS queries
  - Amazon Redshift queries
- Hosted by you:
  - JDBC queries against on-premises databases return results
  - Custom scripts
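
A precondition is a check that must pass before dependent work starts. The sketch below defines a few precondition objects and simulates how each type might decide pass or fail. It uses in-memory sets in place of S3 and DynamoDB and a callable in place of running a script. The type names `S3KeyExists`, `S3PrefixNotEmpty`, `DynamoDBTableExists` and `ShellCommandPrecondition` are from the AWS object reference as I recall it. The `evaluate` function, IDs, keys and table names are hypothetical.

```python
# Hypothetical precondition objects: two of the AWS-hosted kind and one custom
# script that would run on a host you manage.
preconditions = [
    {"id": "LogsExist", "type": "S3KeyExists", "s3Key": "s3://example-bucket/logs/day1.gz"},
    {"id": "UsersTable", "type": "DynamoDBTableExists", "tableName": "example-users"},
    {"id": "CustomCheck", "type": "ShellCommandPrecondition", "command": "test -f /data/ready"},
]


def evaluate(precondition, s3_keys, dynamodb_tables, run_command):
    """Simulate the pass/fail decision for a few precondition types.

    s3_keys and dynamodb_tables are in-memory stand-ins for the real services;
    run_command stands in for running a script and returns its exit code.
    """
    kind = precondition["type"]
    if kind == "S3KeyExists":
        return precondition["s3Key"] in s3_keys
    if kind == "S3PrefixNotEmpty":
        return any(key.startswith(precondition["s3Prefix"]) for key in s3_keys)
    if kind == "DynamoDBTableExists":
        return precondition["tableName"] in dynamodb_tables
    if kind == "ShellCommandPrecondition":
        return run_command(precondition["command"]) == 0  # exit code 0 means "ready"
    raise ValueError(f"precondition type not covered by this sketch: {kind}")


results = {
    p["id"]: evaluate(p, {"s3://example-bucket/logs/day1.gz"}, {"example-users"}, lambda cmd: 1)
    for p in preconditions
}
print(results)  # {'LogsExist': True, 'UsersTable': True, 'CustomCheck': False}
print(all(results.values()))  # False: in this sketch the dependent work would not start
```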

Retries & Notifications

- Retries:
  - Default: 3 attempts per activity
  - Configurable maximum time to keep trying (lateness)
- Configurable Amazon SNS notifications for:
  - Success
  - Lateness
  - Failure (attempts exhausted)
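
The retry and notification behaviour can be simulated to show how the three notifications relate to the attempts. In a pipeline definition these behaviours are configured with activity fields such as `maximumRetries`, `lateAfterTimeout`, `onSuccess`, `onLateAction` and `onFail`. The loop below is a simplified local model, not how the service is implemented. `notify` stands in for publishing to an SNS topic.

```python
def run_with_retries(attempt, notify, max_attempts=3, late_after=None, elapsed=lambda: 0.0):
    """Simulated retry loop with success, late and failure notifications.

    attempt:      callable returning True on success and False on failure
    notify:       callable that receives "success", "late" or "failure"
                  (stand-in for publishing to an Amazon SNS topic)
    max_attempts: total number of attempts; 3 mirrors the default described above
    late_after:   seconds after which one "late" notice is sent (None = never)
    elapsed:      callable returning seconds since the activity was scheduled
    Returns the number of the successful attempt, or None if all attempts failed.
    """
    late_sent = False
    for n in range(1, max_attempts + 1):
        succeeded = attempt()
        if late_after is not None and not late_sent and elapsed() > late_after:
            notify("late")
            late_sent = True
        if succeeded:
            notify("success")
            return n
    notify("failure")
    return None


# Two failures, then a success that finishes after the 25-second lateness threshold.
outcomes = iter([False, False, True])
clock = iter([10, 20, 30])
events = []
used = run_with_retries(lambda: next(outcomes), events.append,
                        late_after=25, elapsed=lambda: next(clock))
print(used, events)  # 3 ['late', 'success']
```

In this model the "late" notice is independent of the outcome. An activity can be late and still succeed, which is why the two notifications are configured separately.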

Pricing

- On-demand pricing: pay for what you use
- No minimum commitment or upfront fees

Use case

Content targeting

- Scenario: managing a website with premium content
- Goal: improve the business by targeting content better
- Second goal: don't break or rewrite the website
- Third goal: lower costs!

How Data Pipeline can help (the plan)

1. Merge geolookup data with server logs to figure out what people look at by region.

2. Merge event data with user demographics to figure out what types of users do what things.

3. Load the results into a database for programmatic consumption by the web servers.

4. Display the results in a reporting dashboard and generate automated reports for the business owner.
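
Steps 1 and 2 of the plan read only raw data, so they can run side by side. Step 3 needs both of their results, and step 4 needs step 3. The sketch below groups the steps into stages that could run concurrently. It uses Python's `graphlib` as a local illustration, and the step names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical step names for the content-targeting plan.
plan = {
    "merge_geo_with_logs": set(),                        # step 1
    "merge_events_with_demographics": set(),             # step 2
    "load_results_into_db": {"merge_geo_with_logs",      # step 3 needs both merges
                             "merge_events_with_demographics"},
    "build_dashboard_and_reports": {"load_results_into_db"},  # step 4
}


def parallel_stages(graph):
    """Group steps into stages; every step in a stage can run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        stages.append(ready)
        sorter.done(*ready)
    return stages


for number, stage in enumerate(parallel_stages(plan), start=1):
    print(number, stage)
# 1 ['merge_events_with_demographics', 'merge_geo_with_logs']
# 2 ['load_results_into_db']
# 3 ['build_dashboard_and_reports']
```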

Building the pipeline

Step 1

Step 2 & 3

Step 4

Creating a data pipeline

1. Data node
   - Name
   - Type (DynamoDBDataNode, MySqlDataNode, S3DataNode)
   - Schedule
2. Activity
   - Name
   - Type (CopyActivity, EmrActivity, HiveActivity, ShellCommandActivity)
   - Schedule
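
Putting the two steps together, the sketch builds a minimal pipeline definition with a `Schedule` object, one data node and one activity, each carrying a name, type and schedule. It then serialises the definition to JSON. Field names (`period`, `startDateTime`, `directoryPath`, `input`, `command`) follow the AWS object reference as I recall it. The IDs, dates and paths are hypothetical. The result is deliberately incomplete: a deployable definition also needs a resource (`runsOn` or `workerGroup`) and IAM roles. A completed file could then be uploaded, for example with the AWS CLI's `aws datapipeline put-pipeline-definition` command.

```python
import json


def ref(object_id):
    """Objects in a pipeline definition refer to each other as {"ref": <id>}."""
    return {"ref": object_id}


def pipeline_definition(bucket_path):
    """Return a minimal, incomplete pipeline definition as a dict (a sketch).

    Only name, type and schedule are shown for the data node and the activity,
    plus the few fields each type needs to make sense.
    """
    schedule = {
        "id": "DailySchedule",
        "name": "DailySchedule",
        "type": "Schedule",
        "period": "1 day",
        "startDateTime": "2013-08-01T00:00:00",
    }
    data_node = {
        "id": "InputData",
        "name": "InputData",
        "type": "S3DataNode",
        "schedule": ref("DailySchedule"),
        "directoryPath": bucket_path,
    }
    activity = {
        "id": "ProcessInput",
        "name": "ProcessInput",
        "type": "ShellCommandActivity",
        "schedule": ref("DailySchedule"),
        "input": ref("InputData"),
        "command": "ls",
    }
    return {"objects": [schedule, data_node, activity]}


print(json.dumps(pipeline_definition("s3://example-bucket/input/"), indent=2))
```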
